Intrascale™ Nimrod Compute Cluster: More Tests Per Dollar Than Any Cloud Alternative

Fully amortized on-premise HPC obliterates cloud rental and SaaS platforms on cost per run, giving clients more CFD iterations per budget than any competing service. 640 EPYC cores, 10 NVIDIA RTX 8000 GPUs, 100Gb/s InfiniBand, and zero egress fees.

More Iterations, Better Results, Lower Cost

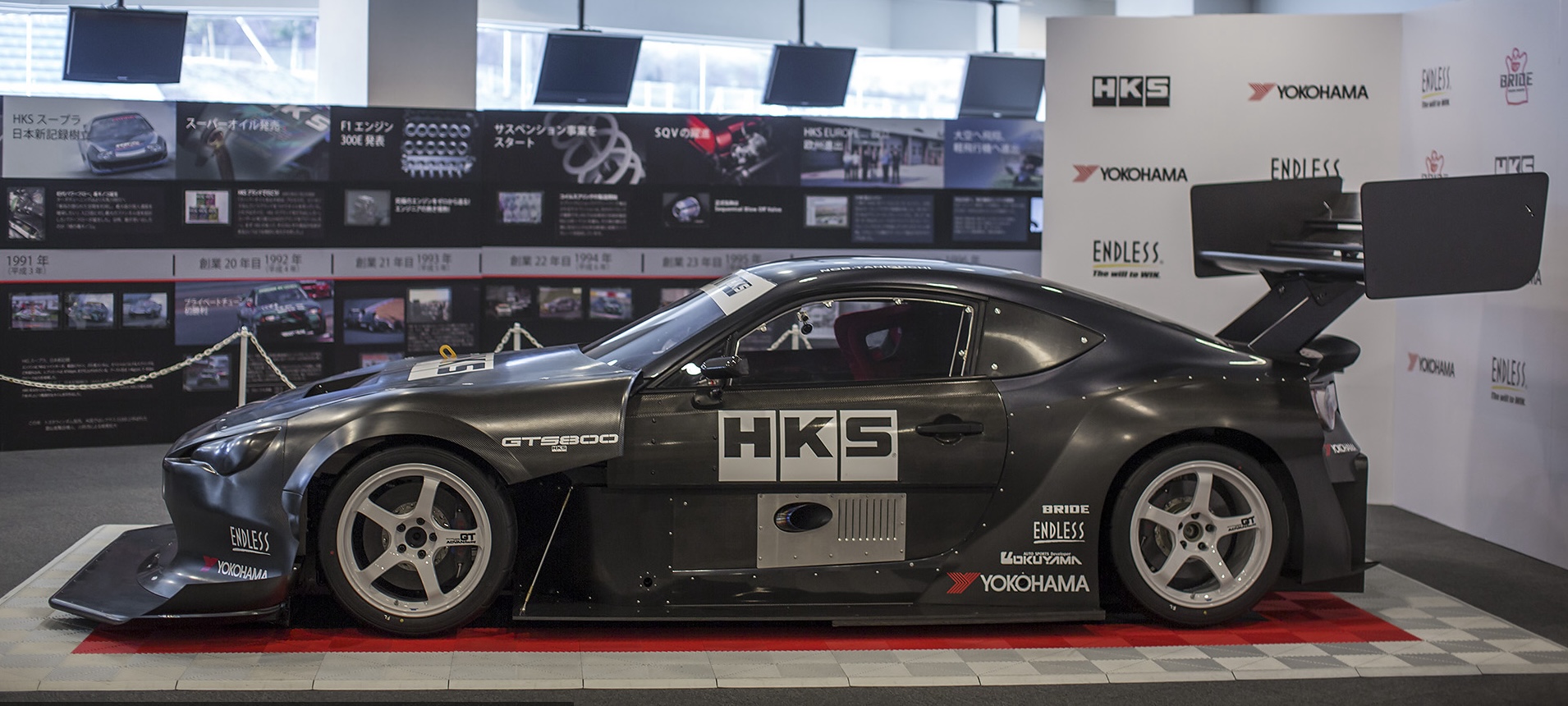

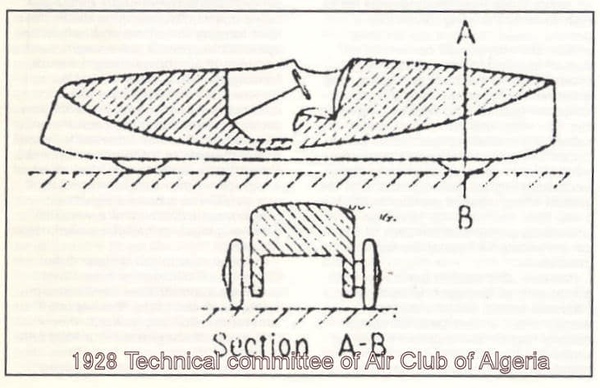

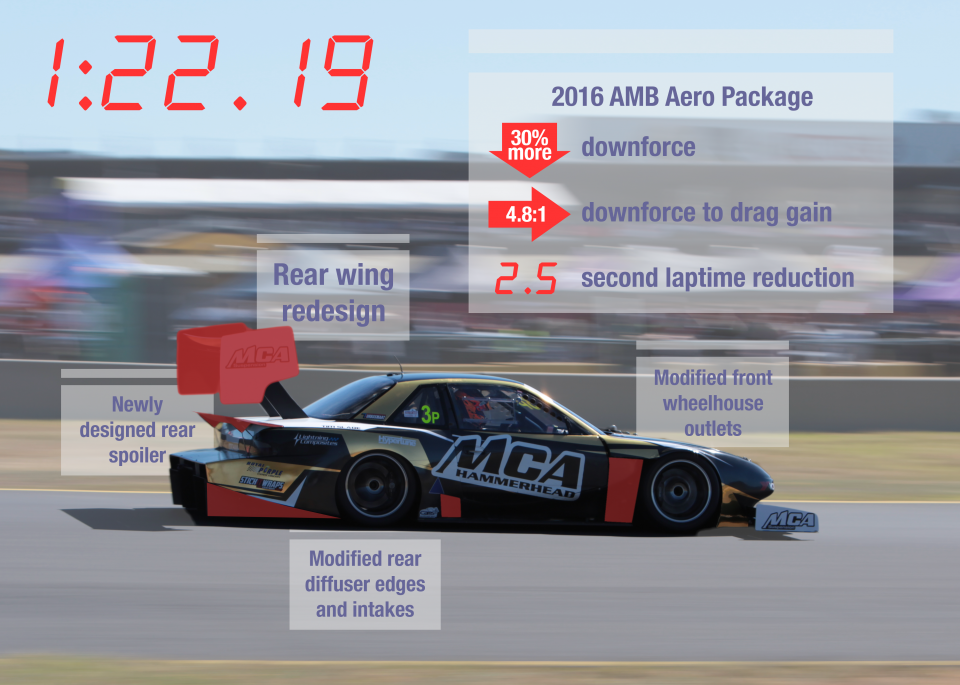

Aerodynamic development is won on test count. The team that runs more iterations inside the same budget finds more downforce, less drag, and fewer surprises at the track. Running HPC workloads at this scale on cloud infrastructure costs tens of thousands of dollars per month. Because the Intrascale™ Nimrod cluster is fully amortized on-premise hardware, our per-run cost is 10–30x lower than cloud rental or SaaS platforms. That cost advantage translates directly to iteration count — our clients run 10–30x more CFD simulations for the same budget.

And the value doesn't stop at compute. Results are delivered through our interactive 3D visualization portal and immersive VR review sessions — capabilities that would cost tens of thousands in cloud data egress fees alone. With Nimrod, there are zero egress charges, zero third-party data exposure, and zero compromise on deliverable quality.

What Is Intrascale™?

Intrascale™ means supercomputer performance at office scale. Nimrod operates in a standard office environment — no dedicated server room, no industrial cooling, no acoustic isolation. The noise and heat output are managed to levels compatible with a normal working space. This is a full HPC cluster that fits where a small business actually works, delivering peak computational performance with the running costs of an office appliance, not a data centre.

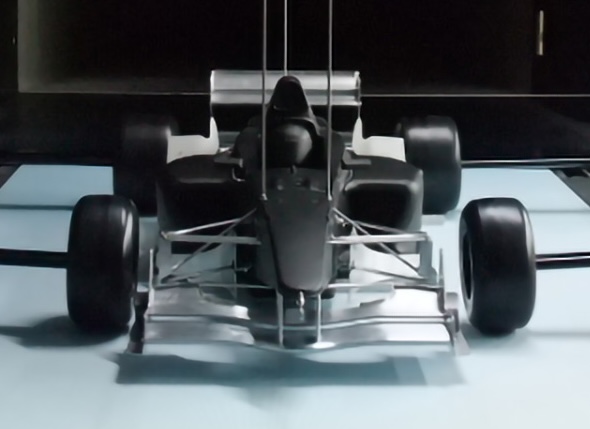

Purpose-Built Infrastructure for Computational Aerodynamics

Applied Dynamics Research announces the Intrascale™ Nimrod Compute Cluster — a custom-engineered high-performance computing platform designed specifically for production CFD workflows and advanced simulation workloads.

Hardware Specifications

- Compute: 640 cores across 5 nodes — Dual AMD EPYC Milan 64-core processors per node

- GPU Acceleration: 2x NVIDIA RTX 8000 per node (10 total) with 480GB total VRAM

- Memory: 1.28TB system RAM — 256GB ECC per node

- Network: 100Gb/s InfiniBand EDR fabric via Mellanox SB7790 switch

- Storage: NVMe flash tier with parallel filesystem for checkpoint/restart
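
As a quick sanity check, the cluster totals in the list above follow from the per-node figures. The sketch below is illustrative only: `NodeSpec` and `cluster_totals` are hypothetical names, and the 48GB-per-GPU value is an assumption inferred from the stated 480GB total across 10 cards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node figures from the specification list (names are hypothetical)."""
    sockets: int = 2              # dual-socket node
    cores_per_socket: int = 64    # 64-core EPYC Milan per socket
    gpus: int = 2                 # RTX 8000 cards per node
    vram_gb_per_gpu: int = 48     # assumption: 480GB total / 10 GPUs
    ram_gb: int = 256             # ECC system RAM per node


def cluster_totals(node: NodeSpec, nodes: int = 5) -> dict:
    """Multiply the per-node figures out to cluster-wide totals."""
    return {
        "cores": nodes * node.sockets * node.cores_per_socket,
        "gpus": nodes * node.gpus,
        "vram_gb": nodes * node.gpus * node.vram_gb_per_gpu,
        "ram_tb": nodes * node.ram_gb / 1000,
    }


totals = cluster_totals(NodeSpec())
print(totals)
```

With the default values this reproduces the headline numbers: 640 cores, 10 GPUs, 480GB of VRAM, and 1.28TB of system RAM.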

Custom BIOS Tuning

Peak CFD throughput required more than off-the-shelf hardware. Custom BIOS configurations across all nodes were key to unlocking sustained performance — memory timings, NUMA topology, and power delivery tuned specifically for the memory-bandwidth and inter-node communication patterns of large-scale CFD solves. This is not a generic rack; every layer is optimised for one job.

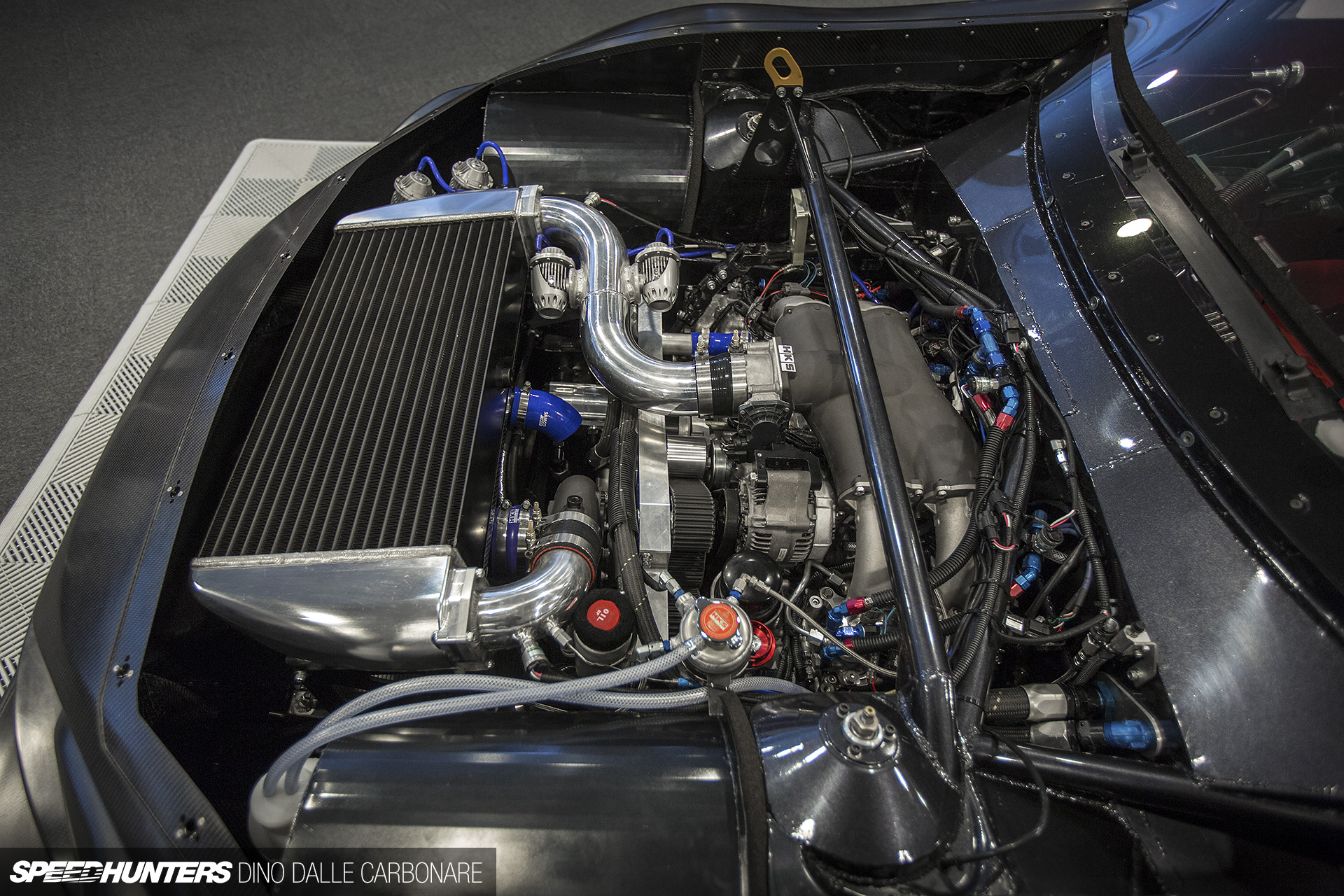

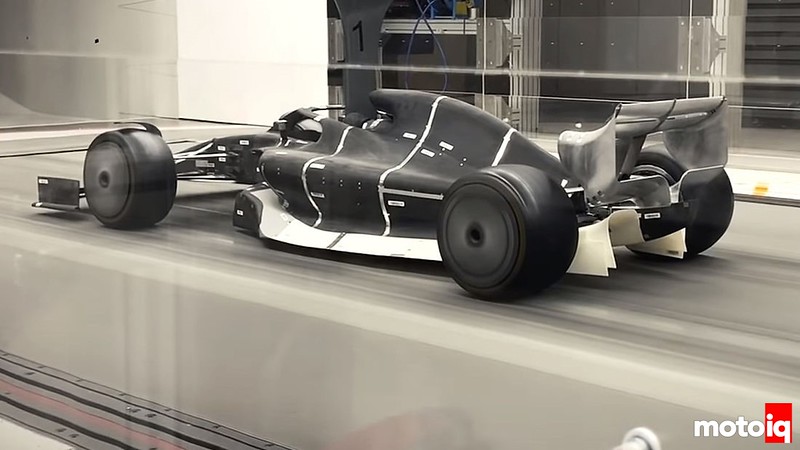

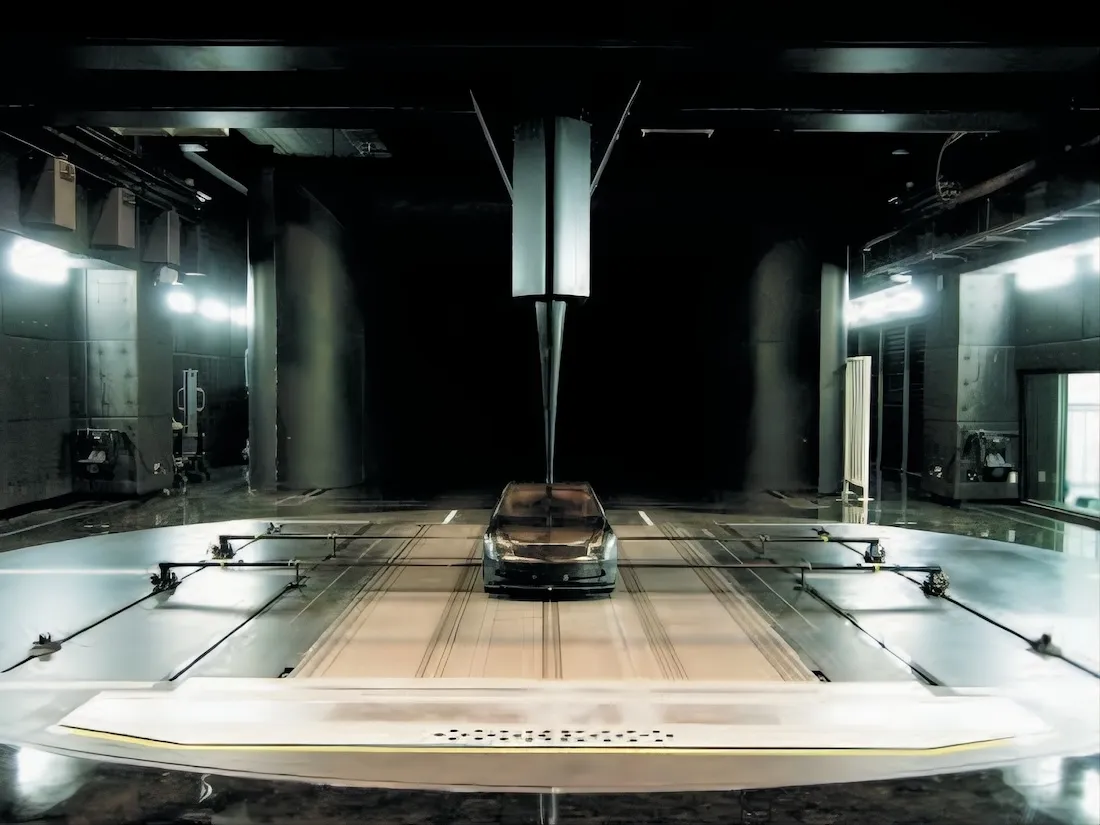

Software Stack — In-House Custom CFD Solvers

Nimrod runs in-house custom CFD solvers developed and coupled directly to this hardware, alongside ANSYS Fluent with custom solver modifications. This tight integration between code and machine — automated mesh refinement, proprietary turbulence model calibrations validated against wind tunnel data from Nissan Technical Center — extracts performance that generic cloud instances cannot match.

A complete automation system handles on-demand cluster power management, job scheduling, and results post-processing, reducing engineer time-to-insight while maintaining full traceability.

Local AI Infrastructure

Frontier LLMs run entirely on-premise, using DeepSeek R1 for research assistance and analysis of NDA-protected datasets. Client data never leaves our facility: no cloud services, no third-party processing, no data exposure risk.

Production Capacity

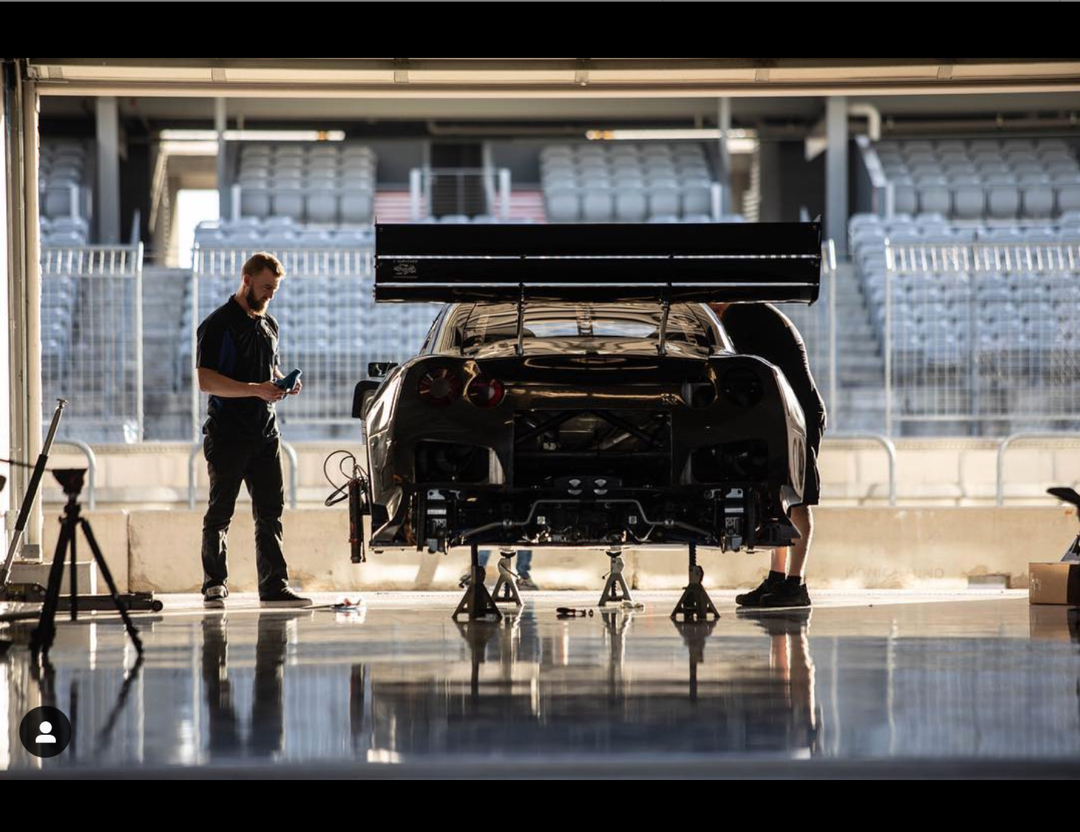

Nimrod delivers the computational throughput to run full-vehicle CFD studies with rotating wheels, detailed underbody, and complete thermal management systems. Typical turnaround: initial results within 24 hours, full optimization studies within one week.

Cost Analysis: On-Premise vs. Cloud vs. SaaS

We frequently encounter questions about cloud computing alternatives. The following comparison reflects actual production costs for professional-grade CFD work:

| Capability | Intrascale™ Nimrod | AWS HPC | SaaS Platform* |
|---|---|---|---|
| Monthly Infrastructure Cost | 1× (baseline) | 20–50× | 3–6× |
| Cost per Full-Vehicle Run | 1× (baseline) | 15–30× | 3–10× |
| Mesh Cell Count | 80–200M cells | Unlimited (cost scales) | 5–20M cells |
| Prism Layer Control | ✓ Full (y+ targeting) | ✓ Full | ✗ Automated only |
| F1-Grade Mesh Density | ✓ Standard | ✓ Available | ✗ Not supported |
| Rotating Wheels / MRF | ✓ Full support | ✓ Full support | ✗ Limited/none |
| Custom Turbulence Models | ✓ Wind tunnel validated | ✓ User-defined | ✗ Preset only |
| Data Security | ✓ Always air-gapped | ~ Cloud-dependent | ✗ Third-party servers |
| Data Delivery Platform | ✓ Custom portal + VR | ~ S3 download | ✗ Static images/PDF |
| Data Egress Costs | ✓ None (local delivery) | ✗ Per-GB charges add up | ~ Limited exports |

*Representative of browser-based CFD services. Specific capabilities vary by provider.
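
To make the "Cost per Full-Vehicle Run" row concrete, the relative multipliers can be turned into a run count for a fixed budget. This is a rough sketch, not a pricing tool: the budget is expressed in units of one Nimrod run (an assumption made here so no absolute prices are needed), and `runs_for_budget` is a hypothetical helper name.

```python
# Relative cost per full-vehicle run, copied from the table (Nimrod = 1x).
# Each entry is (low multiplier, high multiplier).
COST_PER_RUN_MULTIPLIER = {
    "Intrascale Nimrod": (1.0, 1.0),
    "AWS HPC": (15.0, 30.0),
    "SaaS Platform": (3.0, 10.0),
}


def runs_for_budget(budget_in_nimrod_runs: float) -> dict:
    """Return (fewest, most) whole runs each platform affords.

    The budget is measured in units of one Nimrod run's cost, so a
    budget of 300 buys exactly 300 runs at the 1x baseline.
    """
    result = {}
    for platform, (low, high) in COST_PER_RUN_MULTIPLIER.items():
        fewest = int(budget_in_nimrod_runs // high)  # priciest end of the range
        most = int(budget_in_nimrod_runs // low)     # cheapest end of the range
        result[platform] = (fewest, most)
    return result


runs = runs_for_budget(300)
for platform, (fewest, most) in runs.items():
    print(f"{platform}: {fewest}-{most} runs")
```

For a budget equal to 300 baseline runs, the table's ranges work out to 10 to 20 runs on AWS HPC and 30 to 100 runs on a SaaS platform.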

Methodology Requirements

Professional-grade simulation demands professional-grade input. Our workflow requires watertight, analysis-ready CAD geometry — surfaces must be properly trimmed, gaps closed, and intersections resolved before meshing. This preprocessing discipline eliminates the ambiguity that automated platforms mask with approximations.

The result: predictable, repeatable, wind-tunnel-correlated data. When the input is rigorous, the output is trustworthy.

Performance Benchmarks

The cluster delivers over 50 TFLOPS of computational performance, enabling simulation of complex aerodynamic phenomena previously impossible to resolve. The system runs advanced RANS and LES turbulence models, capturing transient flow structures and vortex shedding with microsecond temporal resolution. Mesh densities exceeding 500 million cells allow accurate prediction of boundary layer transition and separation.

This computational power translates directly to client success — development cycles reduced by 60%, with virtual testing replacing expensive wind tunnel hours. Recent projects have achieved drag prediction accuracy within 1% of experimental measurements.

For partnership and compute access inquiries, contact us directly.

Applied Dynamics Research
